THE PROBLEM

What is the persistent problem in the data ecosystem?

On average, resolving a single simple upstream change event easily takes more than a few weeks. Moreover, if the issue affects popular data pipelines, revenue is directly affected.

What do Data Personas want?

As a data producer, I want to produce data strategically to complement business requirements. However, I don’t want to be constrained during data generation, since constraints reduce the speed and richness of data.

As a business user or data consumer, I want quick access to reliable data that adheres to my business or use-case requirements, without weeks or months of iteration with data teams. I want to use high-quality data at the right time to achieve maximum impact on fast-paced business decisions.

Such disjointed communication between data personas results in:

🌪️ Unmanageable data ecosystem

🥪 Sandwiched data engineers

💪🏼 Lack of data ownership or accountability

Without direct consensus between data producers and data consumers, the data lacks schema fit, governance, quality, and, as a result, reliability.

THE SOLUTION

What is the least disruptive solution?

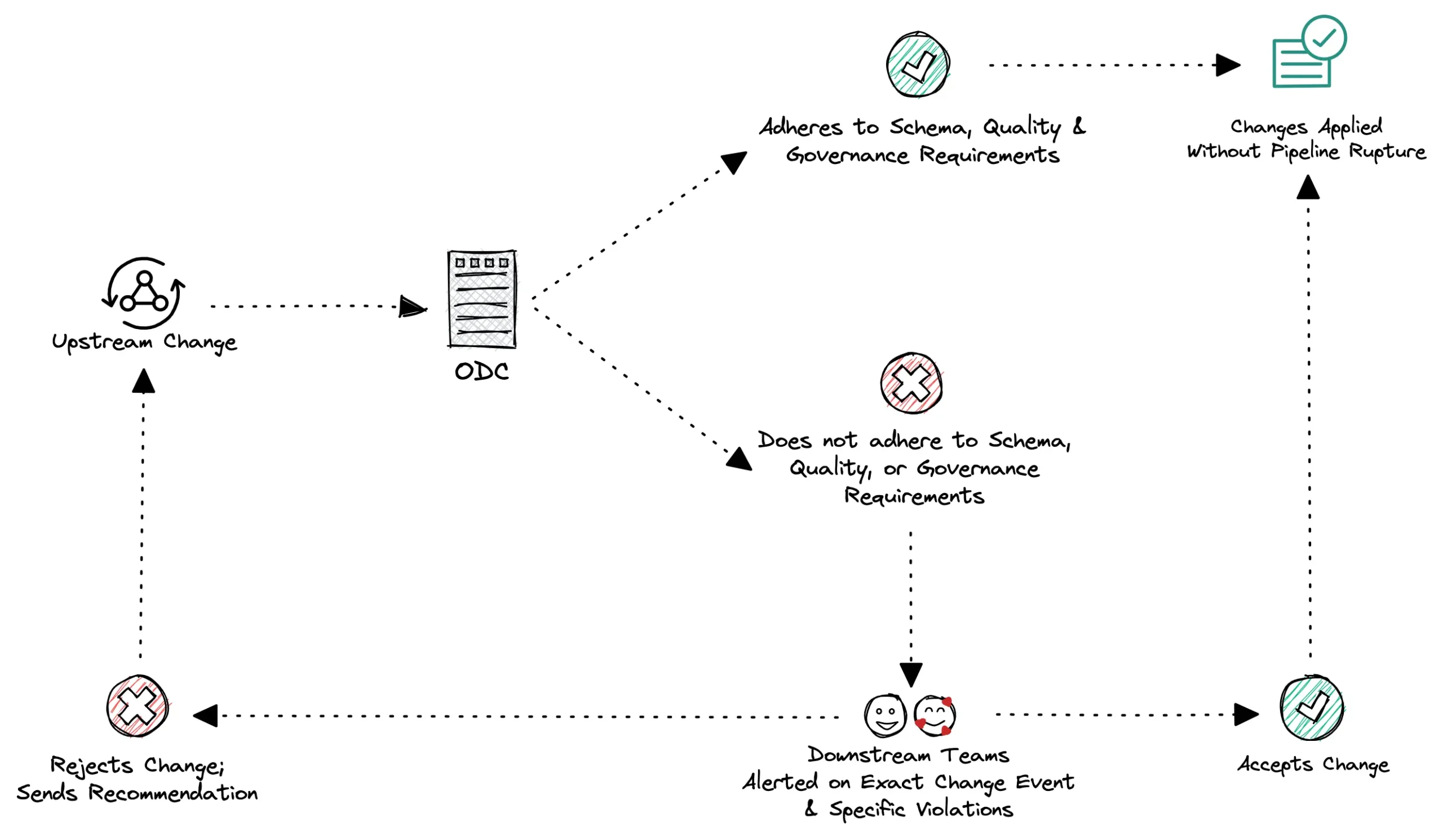

To enable high-quality, trustworthy data that truly impacts the business, the data ecosystem needs to adopt the concept of API handshakes. We call these handshakes Data Contracts. Data Contracts give ownership of data semantics back to the business, enabling data that is rich with business context, democratized, declarative, and highly operable.

WHAT

What are Open Data Contracts?

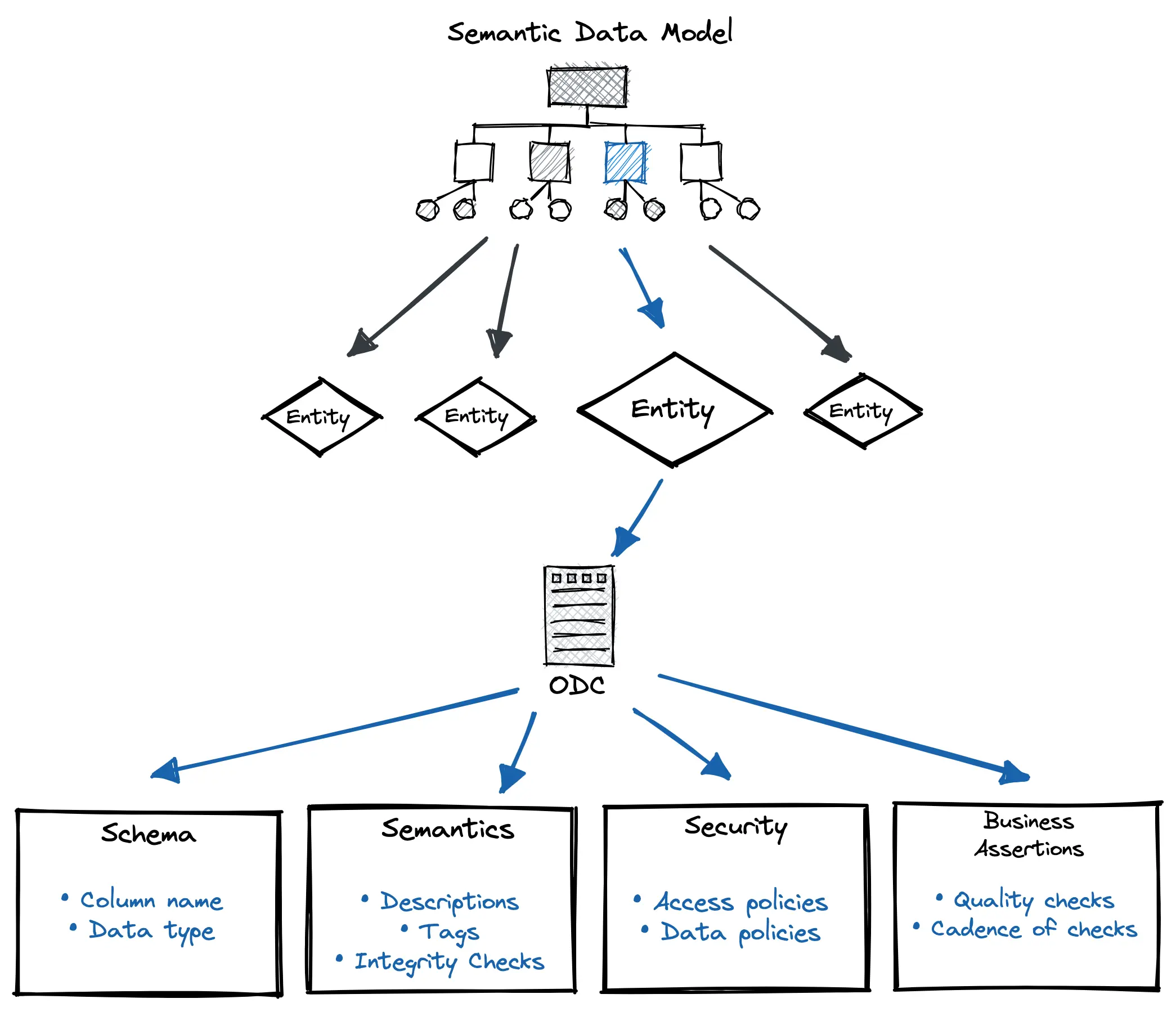

The Four Cornerstones of ODC: Expectations fulfilled by contracts

| Specs | Description |
|---|---|
| Schema | • Column name • Data type |
| Semantics | • Entity and column description |
| Security | • Access policies • Data policies |
| Business assertions | • Quality checks • The cadence of checks |
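
As a rough illustration of how the four cornerstones could sit together in one object, here is a minimal Python sketch. The class names (`Column`, `Assertion`, `Contract`), the field names, and the sample `orders` values are all hypothetical; they are assumptions made for this example and not part of any ODC specification.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class Column:
    name: str               # schema: column name
    data_type: str          # schema: data type
    description: str = ""   # semantics: column description


@dataclass
class Assertion:
    name: str
    check: str     # business assertion: a human-readable quality rule
    cadence: str   # business assertion: how often the check runs


@dataclass
class Contract:
    entity_description: str                                      # semantics
    columns: List[Column]                                        # schema + semantics
    access_policies: List[str] = field(default_factory=list)     # security
    data_policies: List[str] = field(default_factory=list)       # security
    assertions: List[Assertion] = field(default_factory=list)    # business assertions

    def schema(self) -> Dict[str, str]:
        """Return the expected column name -> data type mapping."""
        return {c.name: c.data_type for c in self.columns}


# Hypothetical contract for an "orders" business object.
orders_contract = Contract(
    entity_description="A customer order placed through any sales channel",
    columns=[
        Column("order_id", "string", "Unique identifier of the order"),
        Column("order_total", "decimal", "Total order value"),
    ],
    access_policies=["analysts: read"],
    data_policies=["mask: customer_email"],
    assertions=[Assertion("non_negative_total", "order_total >= 0", "hourly")],
)

print(orders_contract.schema())
```

Keeping all four concerns in one declarative object is a design choice for the sketch: it lets a single artifact be reviewed by producers and consumers alike.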

WHY

Why Implement Open Data Contracts?

Manageable Data Ecosystem

Concrete and Adaptable Data Pipelines

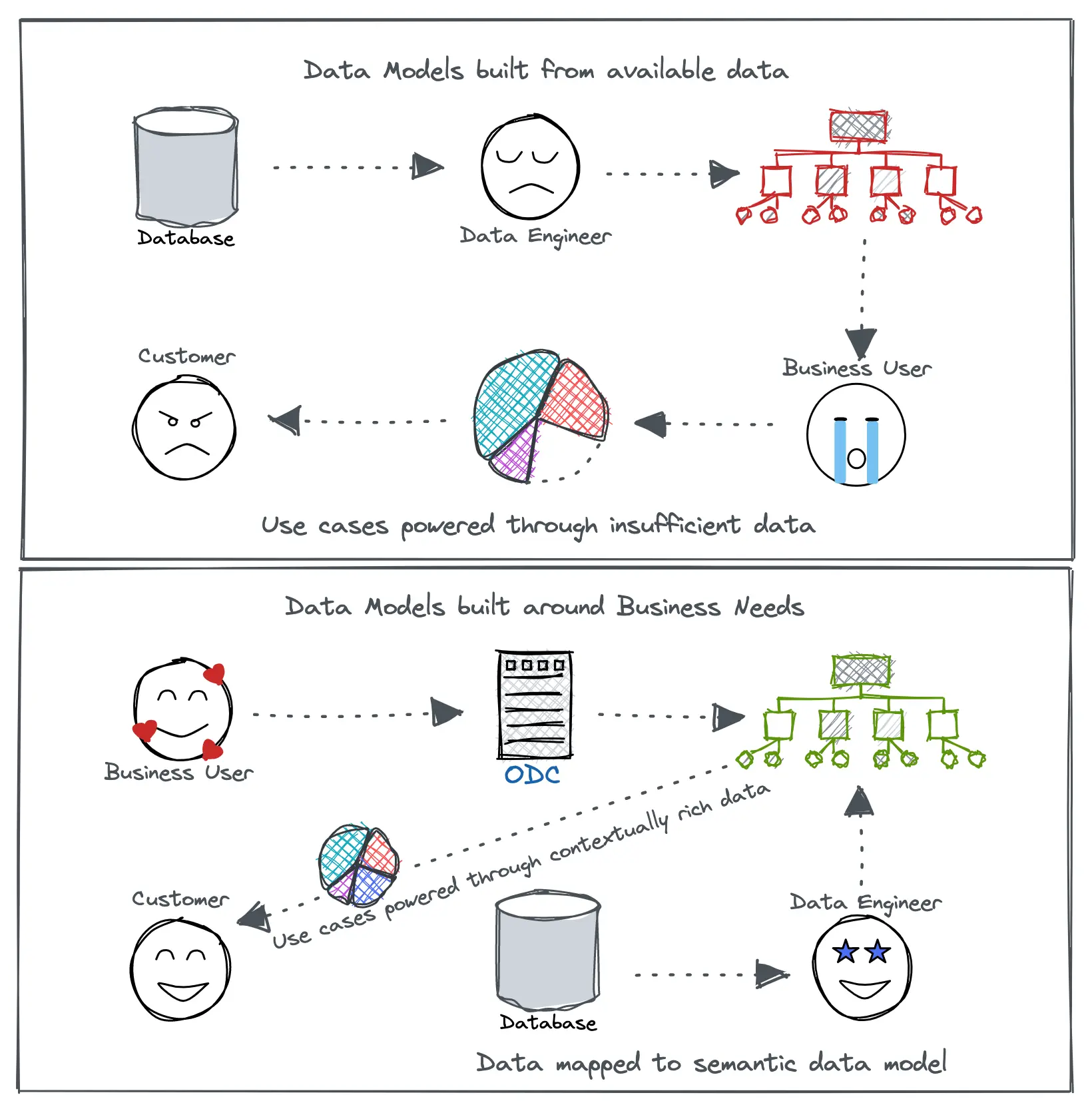

Bridge between Business Logic and Physical data

Optimizing Data Modeling

Happy Data Engineers

High Data Quality

HOW

How to Implement Open Data Contracts?

ODC Landscape

| Objects in the Landscape | Description |
|---|---|
| Dataview | A set of data points. A Dataview acts as a bridge between physical data and Contracts. It can reference a table stored in any lakehouse, a Kafka topic, a Snowflake view, a complex query extracting information from multiple sources, etc. |
| Contract | Contains strongly typed data expectations such as column type, business meaning, quality, and security. Think of Contracts as guidelines to which a Dataview may or may not adhere. |
| Entity | A logical representation of a business object, mapped by a Dataview that adheres to a specific Contract with a certain degree of confidence. It also contains information on what can be measured and what can be categorized into dimensions. |

| Other Entity Specs | Description |
|---|---|
| Dimensions | Categorical or time-based data that adds context to the measures. Users can slice and dice measures by dimensions to get deeper insights. |
| Measures | Aggregated values across dimensions. These dimensions can be internal or external, that is, belonging to other entities. The formula or description for each measure is expected from the persona building the Dataview. |
| Relations | A list of 1:1, 1:Many, or Many:1 relations with other entities, enabling calculation and eventual analysis of measures and metrics. |
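
To make the relationship between dimensions and measures concrete, the following Python sketch aggregates one measure across two dimensions. The `Entity` class, its `aggregate` method, and the sample rows are hypothetical and exist only for illustration; the measure is assumed to be a simple sum over a source column, which is one of many possible formulas.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class Entity:
    name: str
    dimensions: List[str]       # columns used to slice measures
    measures: Dict[str, str]    # measure name -> source column to sum (assumed formula)
    relations: Dict[str, str]   # related entity -> cardinality

    def aggregate(self, rows: List[dict], measure: str, dimension: str) -> Dict[str, float]:
        """Sum the measure's source column, grouped by one dimension."""
        if dimension not in self.dimensions:
            raise KeyError(f"unknown dimension: {dimension}")
        column = self.measures[measure]
        totals: Dict[str, float] = defaultdict(float)
        for row in rows:
            totals[row[dimension]] += row[column]
        return dict(totals)


# Hypothetical "order" entity and sample rows from its Dataview.
order = Entity(
    name="order",
    dimensions=["region", "order_month"],
    measures={"revenue": "order_total"},
    relations={"customer": "Many:1"},
)

rows = [
    {"region": "EU", "order_month": "2023-01", "order_total": 120.0},
    {"region": "EU", "order_month": "2023-02", "order_total": 80.0},
    {"region": "US", "order_month": "2023-01", "order_total": 200.0},
]

revenue_by_region = order.aggregate(rows, "revenue", "region")
revenue_by_month = order.aggregate(rows, "revenue", "order_month")
print(revenue_by_region)
print(revenue_by_month)
```

Slicing by a dimension that belongs to a related entity would additionally require a join along one of the declared relations, which this sketch leaves out.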

Federated responsibilities

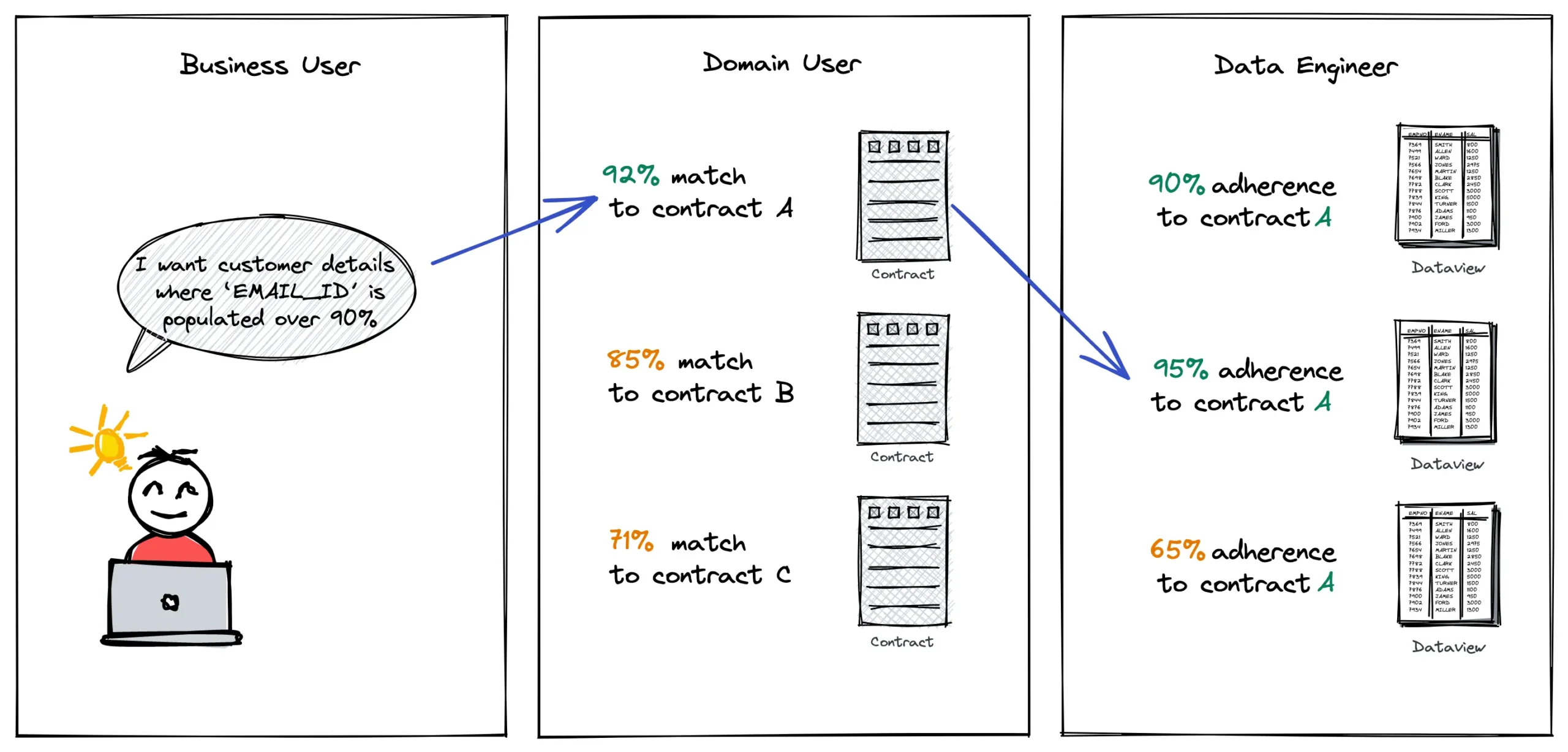

Dataview, Contract, and Entity each fall under the ownership of a different persona. However, no persona is restricted from setting up any of these components. The boundary is loosely defined based on the typical roles of three personas:

| Persona | Responsibility |
|---|---|
| Data Engineer | • Data engineers, who work with the actual physical data, can produce Dataviews independently, without being blocked by Contracts. • Data engineers can use Contracts as guidelines to produce Dataviews that meet the requirements from the business end. • Data engineers can run CRUD operations on Dataviews to progressively increase their percentage adherence to one or more Contracts. |
| Domain User | • Domain users are responsible for defining Contracts since they own the domain knowledge. • The domain structure is backed by a data mesh architecture that supports domain ownership by giving control of niche data to domain teams. • Assigning responsibility for Contracts to domain teams removes bottlenecks on central data teams, which are then responsible only for the central control plane that handles governance, metadata, and orchestration. |
| Business User | • Business users are responsible for defining Entities with three attributes: Measures, Dimensions, and Relations. • Responsibility for these three attributes falls on business teams, since they work directly with data applications on the customer side and know which connections, equations, and columns have to be operationalized for specific business outcomes. • Business users can create queries that are matched against a set of Contracts and Dataviews. The Contracts that best match the query are surfaced, along with the Dataviews that best match those Contracts. |
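
The idea of percentage adherence, and of surfacing the Dataviews that best match a Contract, can be sketched as follows. The text does not define how adherence is computed, so this example assumes the simplest possible rule: the share of contract columns that a Dataview provides with the expected type. The function names, schemas, and Dataview names are all hypothetical.

```python
from typing import Dict, List, Tuple

Schema = Dict[str, str]  # column name -> data type


def adherence(contract_schema: Schema, dataview_schema: Schema) -> float:
    """Percentage of contract columns present in the Dataview with the expected type.

    Assumed scoring rule for illustration only; a real system might also
    weigh semantics, security policies, and quality checks.
    """
    if not contract_schema:
        return 100.0
    matched = sum(
        1 for col, dtype in contract_schema.items()
        if dataview_schema.get(col) == dtype
    )
    return 100.0 * matched / len(contract_schema)


def rank_dataviews(contract_schema: Schema,
                   dataviews: Dict[str, Schema]) -> List[Tuple[str, float]]:
    """Return (dataview name, adherence %) pairs, best match first."""
    scored = [(name, adherence(contract_schema, schema))
              for name, schema in dataviews.items()]
    return sorted(scored, key=lambda pair: pair[1], reverse=True)


contract = {
    "order_id": "string",
    "order_total": "decimal",
    "region": "string",
    "ordered_at": "timestamp",
}

dataviews = {
    # order_total has the wrong type and ordered_at is missing
    "orders_raw": {"order_id": "string", "order_total": "float", "region": "string"},
    # every contract column is present; extra columns do not reduce the score
    "orders_clean": {"order_id": "string", "order_total": "decimal",
                     "region": "string", "ordered_at": "timestamp",
                     "channel": "string"},
    "orders_kafka": {"order_id": "string"},
}

ranking = rank_dataviews(contract, dataviews)
print(ranking)
```

Under this rule, a data engineer who fixes the `order_total` type in `orders_raw` would raise its adherence from 50% to 75% without the Contract ever blocking the Dataview from being published.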

Contracts & The Three-Layered Data Operating System Landscape

Contracts integrate with each layer to facilitate a governed, high-quality exchange of data across the layers.

| DOS Layers | Contract Implementation |
|---|---|
| Data Layer | You establish a connection with your heterogeneous, multi-cloud data environments to make data consistently addressable. The layer gives users access to data via a standard SQL interface, automatically catalogues the metadata, and implements data privacy using the ABAC engine. |
| Knowledge Layer | With dynamic metadata management capabilities, DOS identifies associations between data assets and surfaces the lineage. Auto-classification finds the hidden business meaning in the datasets and propagates this information, including sensitive identifiers, to downstream consumers. All of the information is stored in a graph structure to utilize the network effect, and with open APIs, users can augment the data programmatically. |
| Semantic Layer | The semantic layer can access and model data across disparate sources. It allows you to view and express various business concepts through different lenses. Users can also define key business KPIs, and the system automatically surfaces anomalies around them. With Contracts, business users can express their data expectations and enforce them while modelling business entities. |